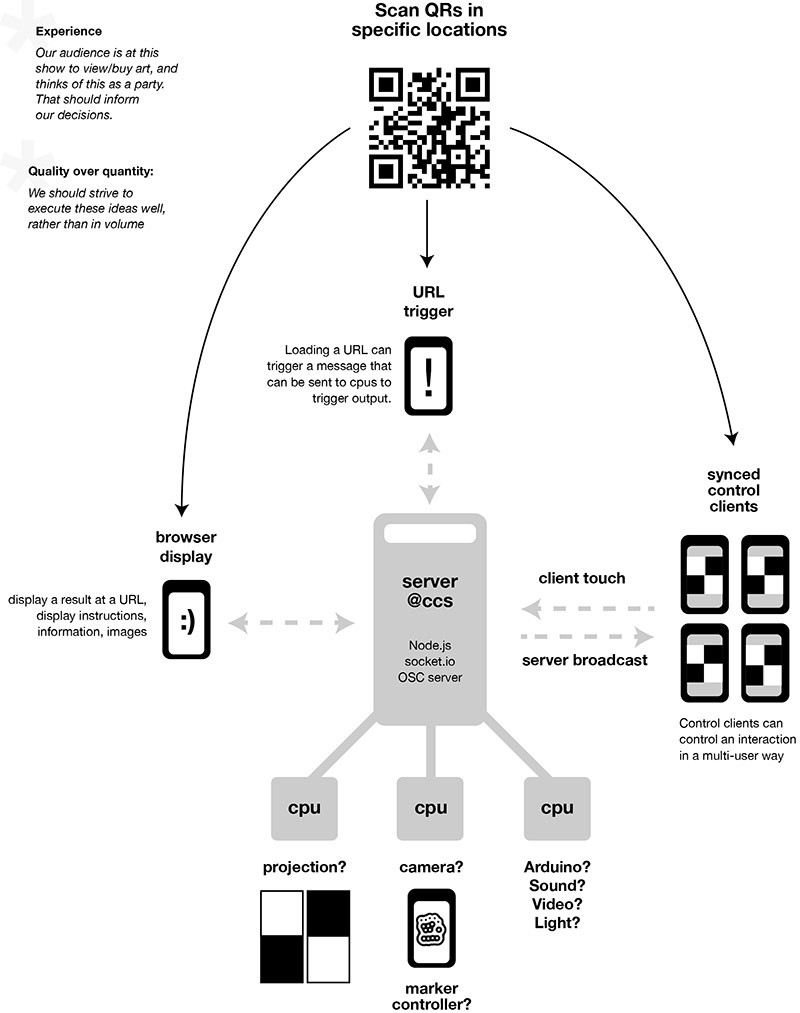

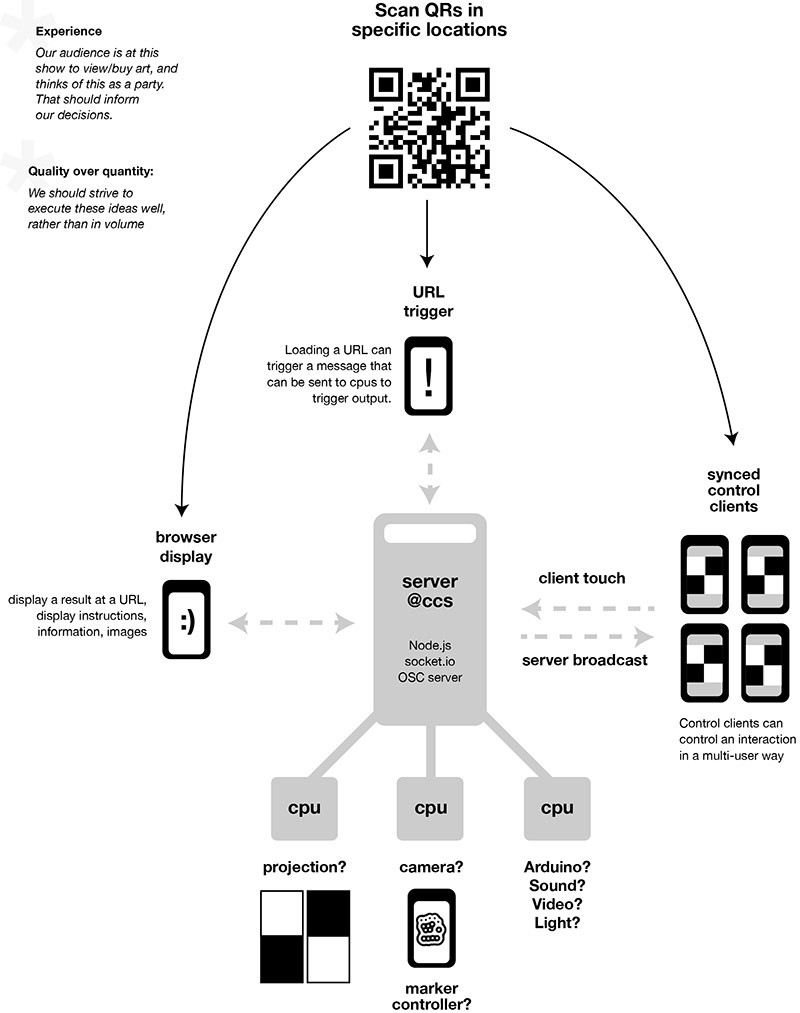

We were looking for a way to create interactions in the natural spaces of the building, on the walls and in the windows, and to avoid the “kiosk” interface as much as possible. By choosing the QR code, we elected to use the visitor’s own smartphone as the main user interface for the installation.

By now, most people at least recognize the form of the QR code, but many don’t yet know what it is used for. The QR code is most commonly used as a hyperlink to product or service information, and that’s how most people who have scanned one understand its usage. Our goal was to create a new context for the QR code for users with prior experience, as well as to introduce this use of the QR code to a new audience.

We knew that the number of show-goers with smartphones might be somewhat limited, but accepted the constraint. To simplify development, we limited support to touchscreen devices running Android and iOS. We tried to support BlackBerry devices but found it difficult, so the installation was only partially functional on them. (It’s worth noting that it was surprising to see how many designers/creatives use BlackBerry devices; my stereotype of the BlackBerry user has been shattered!)

Normally this kind of real-time interaction is easier to accomplish via a device-native solution like an iPhone or Android application, but we chose a web application instead for its compatibility across platforms. Those without smartphones didn’t seem to be any less engaged, as each interaction drew as many onlookers as participants. Perhaps the most interesting aspect of the SEO event was watching the discovery process, having conversations with curious people, and witnessing the “A-ha” moments first-hand.

Skip this part if you don’t like to see the innards.

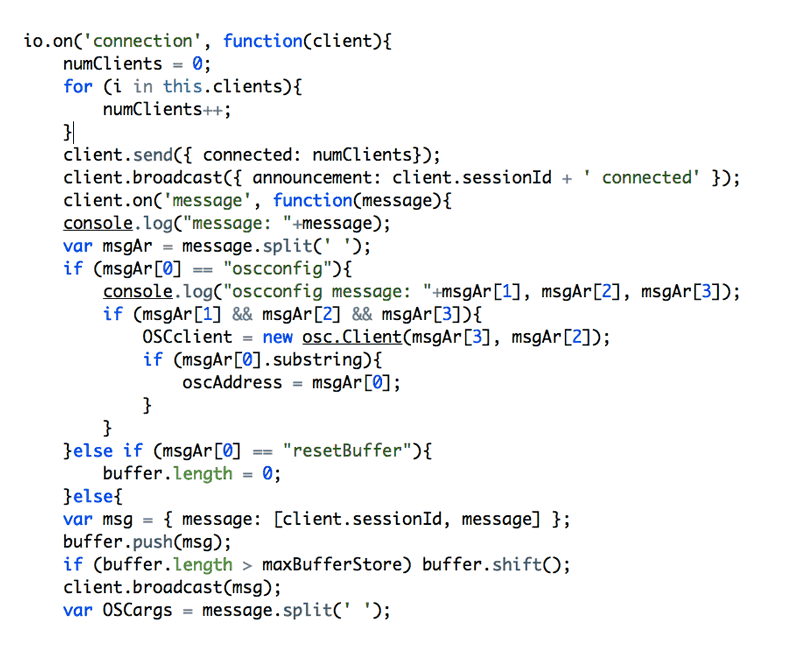

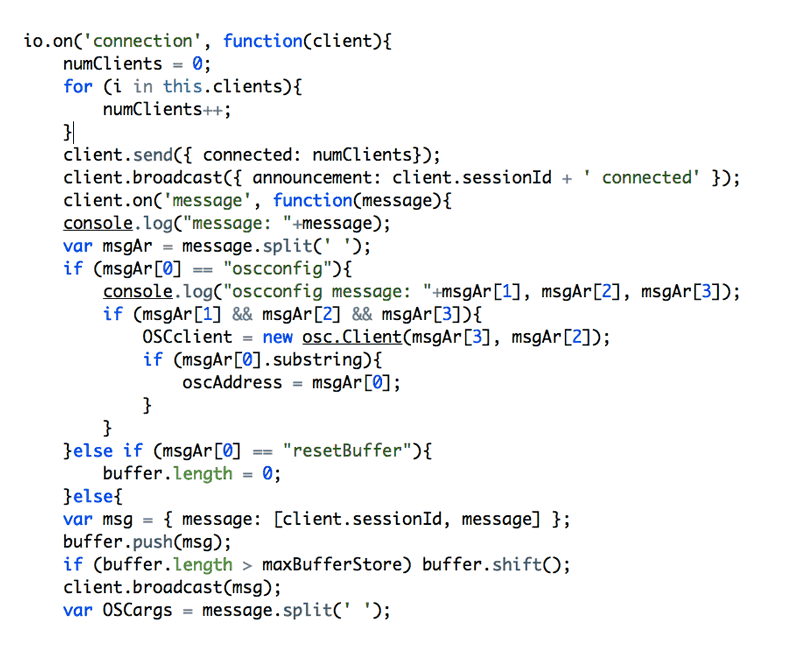

We needed a means for the user to communicate with our interactive pieces, and a way for the pieces to chat amongst themselves. We decided to use a common “language” that all the applications could speak, and a natural fit for this was the OSC protocol (Open Sound Control). OSC has a gentle learning curve, wide adoption, and libraries for several interactive frameworks, like Processing, ActionScript, Arduino and openFrameworks.
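For the curious, here is a rough sketch of what an OSC message looks like on the wire. It is not the code we ran (we used existing libraries); it is a minimal encoder written against the OSC 1.0 layout, using only Node’s built-in `Buffer`. The address `/wall/color` and its arguments are made up for illustration.

```javascript
// Minimal OSC 1.0 message encoder using only Node's built-in Buffer.
// The address "/wall/color" and its arguments are hypothetical examples.

// OSC strings are ASCII, null-terminated, and padded to a multiple of 4 bytes.
function oscString(str) {
  const length = Buffer.byteLength(str, 'ascii') + 1; // +1 for the terminator
  const padded = Math.ceil(length / 4) * 4;
  const buf = Buffer.alloc(padded); // zero-filled, so the padding is already nulls
  buf.write(str, 0, 'ascii');
  return buf;
}

// A message is: address string, type tag string (",ifs" etc.), then arguments.
function encodeOscMessage(address, args) {
  let typeTags = ',';
  const parts = [];
  for (const arg of args) {
    if (typeof arg === 'string') {
      typeTags += 's';
      parts.push(oscString(arg));
    } else if (Number.isInteger(arg)) {
      typeTags += 'i'; // 32-bit big-endian integer (throws if out of range)
      const b = Buffer.alloc(4);
      b.writeInt32BE(arg, 0);
      parts.push(b);
    } else if (typeof arg === 'number') {
      typeTags += 'f'; // 32-bit big-endian float
      const b = Buffer.alloc(4);
      b.writeFloatBE(arg, 0);
      parts.push(b);
    } else {
      throw new TypeError('Unsupported OSC argument: ' + arg);
    }
  }
  return Buffer.concat([oscString(address), oscString(typeTags), ...parts]);
}

const packet = encodeOscMessage('/wall/color', [255, 0.5, 'red']);
console.log(packet.length); // every field is 4-byte aligned
```

A packet like this is typically sent over UDP to whichever application is listening, which is part of why so many frameworks can speak OSC with very little setup.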

We then needed traffic control for all this communication, so we chose Node.js, an open-source server-side JavaScript runtime, in combination with the Node modules Socket.io and Node-OSC. This server cocktail “made things talk”, and allowed us to write applications that receive real-time input from smartphone clients, with the ability to sync and broadcast to all clients. The server can then serve/share OSC data with multiple interactive applications as needed.
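The “traffic control” idea boils down to a fan-out: one input from a phone is echoed to every connected client and forwarded to every installation. Below is a small sketch of that pattern with in-memory stand-ins in place of real sockets. The names (`createHub`, `addClient`, `addInstallation`, `handleInput`) are hypothetical and not from our codebase; in a real server the client callbacks would presumably wrap Socket.io connections and the installation callbacks would wrap an OSC sender.

```javascript
// Sketch of the relay pattern: input from one phone is broadcast to every
// connected client and forwarded to each installation as an OSC-style message.
// All names here are hypothetical; no real network I/O happens in this sketch.

function createHub() {
  const clients = new Set();       // stand-ins for connected browser sockets
  const installations = new Set(); // stand-ins for OSC destinations

  return {
    // Register a client; returns a function to call when it disconnects.
    addClient(send) {
      clients.add(send);
      return () => clients.delete(send);
    },
    // Register an installation that accepts (address, args).
    addInstallation(sendOsc) {
      installations.add(sendOsc);
    },
    // Called whenever a phone sends input,
    // e.g. { address: '/wall/color', args: [255, 0, 0] }.
    handleInput(message) {
      for (const send of clients) send(message); // keep all phones in sync
      for (const sendOsc of installations) {
        sendOsc(message.address, message.args);  // drive the physical pieces
      }
    },
  };
}

// Usage with in-memory stand-ins instead of real sockets:
const hub = createHub();
const seenByPhone = [];
const seenByWall = [];
const disconnect = hub.addClient((msg) => seenByPhone.push(msg));
hub.addInstallation((address, args) => seenByWall.push([address, args]));

hub.handleInput({ address: '/wall/color', args: [255, 0, 0] });
disconnect(); // the phone leaves; later input only reaches the installation
hub.handleInput({ address: '/wall/color', args: [0, 0, 255] });
```

Keeping the hub ignorant of the transport is a design choice for the sketch: it makes the routing logic easy to test without a browser or a network.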

The important part of this solution was that it allowed us to keep all the client interfaces web-based, allowing interaction from 20 ft or 20 miles away. And since the entire communication layer is written in JavaScript, the same language runs on the phone and on the server, which made it powerful and flexible to work with.

It’s worth noting that toward the end of the development cycle we discovered the value of high-level frameworks like Express and Now.js… these probably would have saved us a good deal of time, but we certainly learned a lot in the process anyway. It’s also not clear if the asynchronous nature of Node helped with performance or throughput, but it would be interesting to test in the future.

Next time!

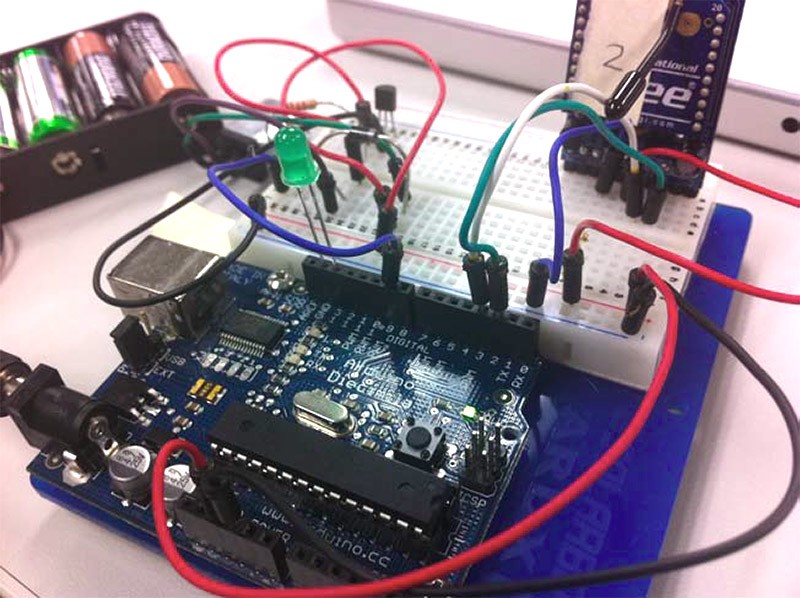

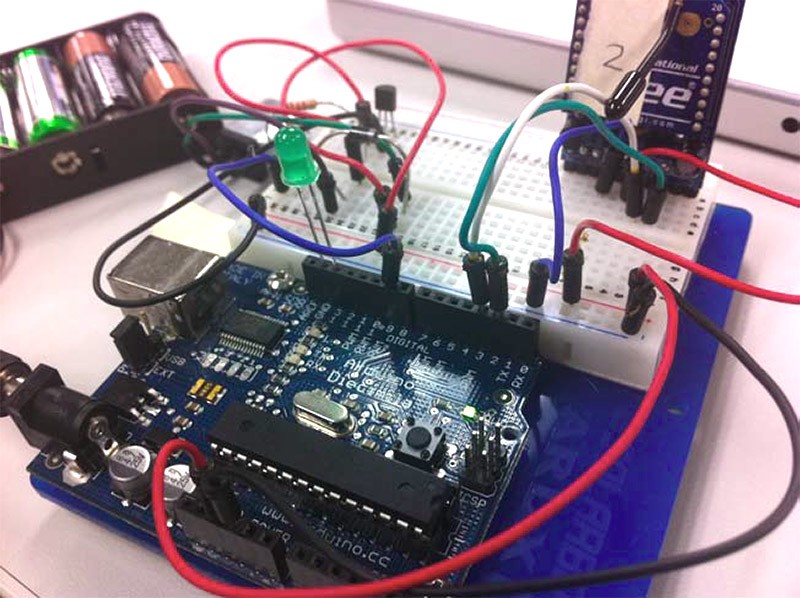

These technologies allowed teams to create virtually-controlled physical displays: mobile clients talking to servers via the internet, servers talking to computers on a local network running Flash and Processing, and computers talking (sans wires) to Arduino microcontrollers. All in all, a pretty powerful and flexible system. I hope we continue to build on it!